Robots.txt File: Block All Search Engines

A Deeper Look At Robots. The Robots Exclusion Protocol REP is not exactly a complicated protocol and its uses are fairly limited, and thus its usually given short shrift by SEOs. Yet theres a lot more to it than you might think. Robots. txt has been with us for over 1. Googlebot obeys That noindexed pages dont end up in the index but disallowed pages do, and the latter can show up in the search results albeit with less information since the spiders cant see the page content That disallowed pages still accumulate Page. Robots.Txt File Block All Search Engines' title='Robots.Txt File Block All Search Engines' />

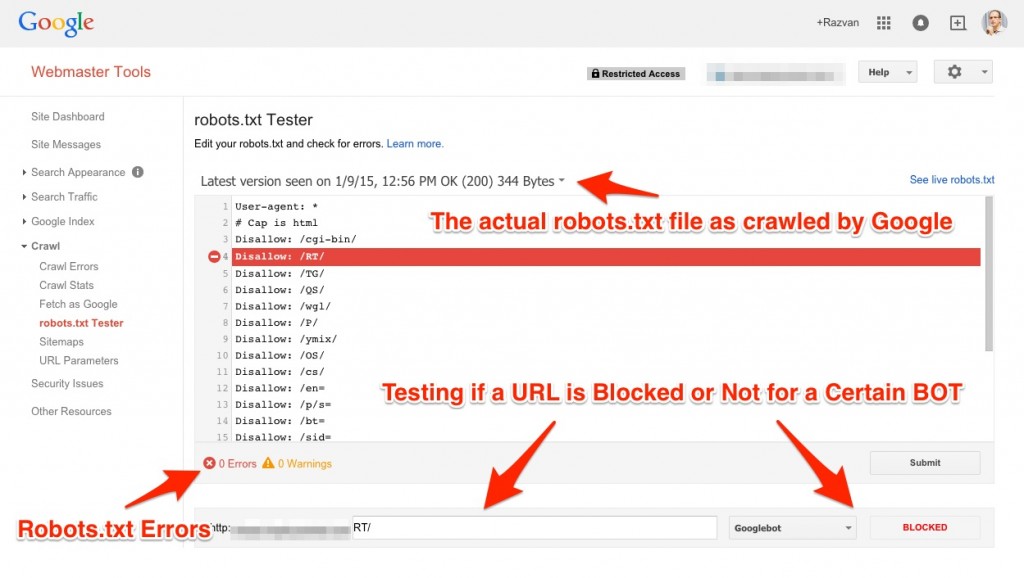

The Devilfinder Search Engine Offering web search, online games, email, free blogs websites, videos movies, images, news and weather. But none of that will matter if the spiders cant tell the search engines your pages are there in the first place, meaning they wont show up in search results. Rank That robots. That, because of that last feature, you can selectively disallow not just directories but also particular filetypes well, file extensions to be more exact That a robots. A robots. txt file provides critical information for search engine spiders that crawl the web. Before these bots does anyone say the full word robots anymore access pages of a site, they check to see if a robots. Doing so makes crawling the web more efficient, because the robots. Having a robots. txt file is a best practice. Even just for the simple reason that some metrics programs will interpret the 4. But what goes in that robots. Thats the crux of it. Both robots. txt and robots meta tags rely on cooperation from the robots, and are by no means guaranteed to work for every bot. If you need stronger protection from unscrupulous robots and other agents, you should use alternative methods such as password protection. Too many times Ive seen webmasters naively place sensitive URLs such as administrative areas in robots. You better believe robots. Emergency 4 Mod'>Emergency 4 Mod. Robots. txt works well for Barring crawlers from non public parts of your website. Barring search engines from trying to index scripts, utilities, or other types of code. Avoiding the indexation of duplicate content on a website, such as print versions of html pages. Auto discovery of XML Sitemaps. At the risk of being Captain Obvious, the robots. A robots. txt file located in a subdirectory isnt valid, as bots only check for this file in the root of the domain. Creating a robots. You can create a robots. It should be an ASCII encoded text file, not an HTML file. Robots. txt syntax. 
- User-agent: the robot the following rule applies to (e.g., Googlebot).
- Disallow: the pages you want to block the bots from accessing (as many Disallow lines as needed).
- Noindex: the pages you want a search engine to block AND not index (or de-index, if previously indexed). Unofficially supported by Google; unsupported by Yahoo and Live Search.
- Each User-agent/Disallow group should be separated by a blank line; however, no blank lines should exist within a group (between the User-agent line and the last Disallow).
- The hash symbol (#) may be used for comments within a robots.txt file, either for whole lines or at the end of lines.
- Directories and filenames are case-sensitive: "private", "Private", and "PRIVATE" are all different to search engines.

Let's look at an example robots.txt file:

    User-agent: Googlebot
    Disallow:

    User-agent: msnbot
    Disallow: /

    # Block all robots from tmp and logs directories
    User-agent: *
    Disallow: /tmp/
    Disallow: /logs # for directories and files called logs

In this example:

- The robot called Googlebot has nothing disallowed and may go anywhere.
- The entire site is closed off to the robot called msnbot.
- All robots other than Googlebot should not visit the /tmp/ directory or directories or files called /logs, as explained with comments.

What should be listed on the User-agent line? A user agent is the name of a specific search engine robot. You can set an entry to apply to a specific bot (by listing its name) or to all bots (by listing an asterisk, which acts as a wildcard). An entry that applies to all bots looks like this:

    User-agent: *

Major robots include Googlebot (Google), Slurp (Yahoo), msnbot (MSN), and TEOMA (Ask).

Bear in mind that a block of directives specified for the user agent Googlebot will be obeyed by Googlebot, but Googlebot will NOT ALSO obey the directives for the all-bots (*) user agent.

What should be listed on the Disallow line? The Disallow line lists the pages you want to block. You can list a specific URL or a pattern.
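To check how a crawler might read the example file above, Python's standard `urllib.robotparser` module can parse it directly. This is a sketch, not how Googlebot or msnbot are actually implemented: the host `example.com` and the helper `allowed()` are hypothetical, and `urllib.robotparser` only does plain prefix matching on Disallow values.

```python
import urllib.robotparser

# The example robots.txt from the text, with its slashes and colons restored.
EXAMPLE_ROBOTS_TXT = """\
User-agent: Googlebot
Disallow:

User-agent: msnbot
Disallow: /

# Block all robots from tmp and logs directories
User-agent: *
Disallow: /tmp/
Disallow: /logs # for directories and files called logs
"""

parser = urllib.robotparser.RobotFileParser()
parser.parse(EXAMPLE_ROBOTS_TXT.splitlines())


def allowed(agent: str, path: str) -> bool:
    """Return True if `agent` may fetch `path` on the hypothetical example.com."""
    return parser.can_fetch(agent, "https://example.com" + path)


if __name__ == "__main__":
    for agent, path in [
        ("Googlebot", "/tmp/report.html"),  # Googlebot has its own group: allowed
        ("msnbot", "/index.html"),          # msnbot is blocked everywhere
        ("Slurp", "/tmp/report.html"),      # falls back to the * group: blocked
        ("Slurp", "/logs.php"),             # "/logs" is a prefix match: blocked
        ("Slurp", "/index.html"),           # matched by no rule: allowed
    ]:
        print(f"{agent:10} {path:18} {allowed(agent, path)}")
```

The Googlebot check illustrates the point made above: once a bot finds a group naming it, the * group no longer applies, so /tmp/ stays open to Googlebot.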
The entry should begin with a forward slash (/). Examples:

- To block the entire site: Disallow: /
- To block a directory and everything in it: Disallow: /private_directory/
- To block a page: Disallow: /private_file.html
- To block a page and/or a directory named private: Disallow: /private

If you serve content via both HTTP and HTTPS, you'll need a separate robots.txt file for each protocol. For example, to allow robots to index all HTTP pages but no HTTPS pages, you'd use this robots.txt file for the HTTP protocol:

    User-agent: *
    Disallow:

And this one for the HTTPS protocol:

    User-agent: *
    Disallow: /

Bots check for the robots.txt file when they crawl a site, so changes to it take effect the next time a bot fetches it. How often it is accessed depends on how frequently the bots spider the site, based on popularity, authority, and how frequently content is updated. Some sites may be crawled several times a day, while others may only be crawled a few times a week. Google Webmaster Central provides a way to see when Googlebot last accessed the robots.txt file.

I'd recommend using the robots.txt tool in Google Webmaster Central to check specific URLs to see whether your robots.txt file allows or blocks them, and whether Googlebot had trouble parsing any lines in your robots.txt file.

Some advanced techniques

The major search engines have begun working together to advance the functionality of the robots.txt file. As alluded to above, some functions that provide finer control over crawling have been adopted by the major search engines, though not necessarily by all of them. As support for these may be limited, do exercise caution in their use.

Crawl-delay: Some websites may experience high amounts of traffic and would like to slow search engine spiders down, freeing server resources to meet the demands of regular traffic. Crawl-delay is a special directive recognized by Yahoo, Live Search, and Ask that instructs a crawler on the number of seconds to wait between crawling pages:

    User-agent: msnbot
    Crawl-delay: 5

Pattern matching: At this time, pattern matching appears to be usable by the three majors: Google, Yahoo, and Live Search.
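Support for these advanced directives varies from crawler to crawler, which is why caution is warranted. As one illustration, Python's standard `urllib.robotparser` (a parser outside the three majors, used here only as an example) reads Crawl-delay through its `crawl_delay()` method but matches Disallow values as plain prefixes, so a wildcard rule such as `Disallow: /*.asp$` does not block .asp URLs for it. The robots.txt content and the host `example.com` below are hypothetical.

```python
import urllib.robotparser

# Hypothetical robots.txt: the Crawl-delay example from the text plus a
# wildcard rule of the kind described under "Pattern matching".
ROBOTS_TXT = """\
User-agent: msnbot
Crawl-delay: 5

User-agent: *
Disallow: /*.asp$
"""

parser = urllib.robotparser.RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# crawl_delay() returns the Crawl-delay of the matching group, or None if
# that group sets none.
msnbot_delay = parser.crawl_delay("msnbot")
googlebot_delay = parser.crawl_delay("Googlebot")

# This parser does plain prefix matching, so "*" and "$" are not treated as
# wildcards: the rule above does NOT block .asp URLs here.
asp_allowed = parser.can_fetch("Googlebot", "https://example.com/cart.asp")

if __name__ == "__main__":
    print("msnbot delay:", msnbot_delay)        # 5
    print("Googlebot delay:", googlebot_delay)  # None
    print("cart.asp allowed:", asp_allowed)     # True
```

A crawler that ignores patterns this way simply sees a path that never matches, so the rule fails open rather than blocking everything.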
The value of pattern matching is considerable. Let's look first at the most basic pattern matching, using the asterisk wildcard character. To block access to all subdirectories that begin with "private":

    User-agent: Googlebot
    Disallow: /private*/

You can match the end of the string using the dollar sign ($). For example, to block URLs that end with .asp:

    User-agent: Googlebot
    Disallow: /*.asp$

Unlike the more advanced pattern matching found in regular expressions in Perl and elsewhere, the question mark does not have special powers. So, to block access to all URLs that include a question mark (?), simply use the question mark (no need to escape it or precede it with a backslash):

    User-agent: *
    Disallow: /*?

To block robots from crawling all files of a specific file type, match the extension and anchor it with $ (here ext is a placeholder for the extension you want to block):

    User-agent: *
    Disallow: /*.ext$

Here's a more complicated example. Let's say your site uses the query string part of the URLs (what follows the ?) solely for session IDs, and you want to exclude all URLs that contain the dynamic parameter to ensure the bots don't crawl duplicate pages. But you may want to include any URLs that end with a ?. Here's how you'd accomplish that:

    User-agent: Slurp
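The * and $ behavior described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the semantics the text describes (* matches any run of characters, a trailing $ anchors the end of the URL, and ? is literal); it is not any search engine's actual matcher, the function names `rule_to_regex` and `is_blocked` are hypothetical, and it ignores how engines resolve conflicts between several rules.

```python
import re


def rule_to_regex(rule: str) -> re.Pattern:
    """Translate a Disallow value using * and $ into a compiled regex.

    Hypothetical helper: "*" matches any run of characters, a trailing "$"
    anchors the end of the URL, and every other character (including "?")
    is matched literally.
    """
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape each literal piece, then rejoin the pieces with ".*" for "*".
    regex = ".*".join(re.escape(piece) for piece in rule.split("*"))
    if anchored:
        regex += "$"
    return re.compile(regex)


def is_blocked(rule: str, path: str) -> bool:
    """True if `path` (path plus query string) matches `rule` from its start."""
    return rule_to_regex(rule).match(path) is not None


if __name__ == "__main__":
    examples = [
        ("/private*/", "/private_files/report.html"),
        ("/private*/", "/privacy.html"),
        ("/*.asp$", "/shop/cart.asp"),
        ("/*.asp$", "/shop/cart.aspx"),
        ("/*?", "/catalog?sessionid=123"),
        ("/*?", "/catalog.html"),
    ]
    for rule, path in examples:
        print(f"{rule:12} {path:28} blocked={is_blocked(rule, path)}")
```

Escaping each literal piece with `re.escape` is what keeps ? literal here, mirroring the point above that the question mark has no special powers in robots.txt even though it does in regular expressions.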
